Engine

AI engines for playing draughts.

Quick Start

```python
from draughts import Board, AlphaBetaEngine

board = Board()
engine = AlphaBetaEngine(depth_limit=5)

# Get best move
best_move = engine.get_best_move(board)
board.push(best_move)

# Get move with evaluation score
move, score = engine.get_best_move(board, with_evaluation=True)
```

Engine Interface

- class draughts.Engine(depth_limit: int | None = 6, time_limit: float | None = None, name: str | None = None)

Abstract base class for draughts engines.

Implement this interface to create custom engines compatible with the Server for interactive play and testing.

- Attributes:

depth_limit: Maximum search depth (if applicable).
time_limit: Maximum time per move in seconds (if applicable).
name: Engine name (defaults to class name).

- Example:

```python
>>> from draughts import Engine
>>> import random
>>>
>>> class RandomEngine(Engine):
...     def get_best_move(self, board, with_evaluation=False):
...         move = random.choice(list(board.legal_moves))
...         return (move, 0.0) if with_evaluation else move
```

- abstractmethod get_best_move(board: BaseBoard, with_evaluation: bool = False) -> Move | tuple[Move, float]

Find the best move for the current position.

- Args:

board: The current board state.
with_evaluation: If True, return a (move, score) tuple.

- Returns:

The best Move, or a (Move, float) tuple if with_evaluation=True. Positive scores favor the current player.

- Example:

```python
>>> move = engine.get_best_move(board)
>>> move, score = engine.get_best_move(board, with_evaluation=True)
```

AlphaBetaEngine

- class draughts.AlphaBetaEngine(depth_limit: int = 6, time_limit: float | None = None, name: str | None = None)

AI engine using Negamax search with alpha-beta pruning.

The engine layers several search optimizations on top of alpha-beta to search the game tree efficiently. It works with any board variant (Standard, American, etc.).

Algorithm:

- Negamax: Simplified minimax using max(a, b) = -min(-a, -b)
- Iterative Deepening: Progressively deeper searches for time control
- Transposition Table: Zobrist hashing to cache evaluated positions
- Quiescence Search: Keeps searching capture sequences past the depth limit to avoid horizon effects
- Move Ordering: PV moves, captures, killers, history heuristic
- PVS/LMR: Principal Variation Search with Late Move Reductions
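To show how negamax and alpha-beta pruning fit together, here is a minimal sketch on a hand-built toy tree. `Node` and `negamax` are hypothetical names for this illustration, not part of the draughts API, and the sketch leaves out the iterative deepening, transposition table, quiescence search, move ordering and PVS/LMR that the engine adds on top.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """Toy game-tree node. A leaf's score is from the side to move at that leaf."""
    score: float = 0.0
    children: list[Node] = field(default_factory=list)


def negamax(node: Node, depth: int, alpha: float, beta: float, counter: list[int]) -> float:
    """Negamax value of `node` for the side to move, searched with alpha-beta."""
    counter[0] += 1  # visited-node count, analogous to the engine's `nodes`
    if depth == 0 or not node.children:
        return node.score
    best = -math.inf
    for child in node.children:
        # The child is scored for the opponent, so negate its value and pass
        # the negated, swapped window (max(a, b) = -min(-a, -b)).
        value = -negamax(child, depth - 1, -beta, -alpha, counter)
        best = max(best, value)
        alpha = max(alpha, value)
        if alpha >= beta:
            break  # cutoff: the parent already has a better option, stop here
    return best


tree = Node(children=[
    Node(children=[Node(3), Node(5)]),
    Node(children=[Node(2), Node(9)]),
])
visited = [0]
print(negamax(tree, 2, -math.inf, math.inf, visited), visited[0])  # prints: 3 6
```

Plain minimax would also return 3 here, but it visits all 7 nodes; alpha-beta skips the leaf scored 9 because the second branch is already known to be worse for the root player.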

Evaluation:

- Material balance (men = 1.0, kings = 2.5)
- Piece-square tables rewarding advancement and center control
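An evaluation of this shape can be sketched as material plus a positional bonus, scored for the side to move. Only the man and king weights come from the list above; `ADVANCE_BONUS` and both function names are hypothetical, and the sketch models only the advancement part of the piece-square tables with a flat per-row bonus.

```python
MAN_VALUE = 1.0    # documented material weight for a man
KING_VALUE = 2.5   # documented material weight for a king

# Hypothetical flat bonus per row a man has advanced; the engine's real
# piece-square tables (including center control) are not documented here.
ADVANCE_BONUS = 0.05


def side_score(men_rows: list[int], kings: int) -> float:
    """Material plus advancement for one side; men_rows[i] = rows advanced by man i."""
    material = len(men_rows) * MAN_VALUE + kings * KING_VALUE
    return material + ADVANCE_BONUS * sum(men_rows)


def evaluate_sketch(own_men_rows: list[int], own_kings: int,
                    opp_men_rows: list[int], opp_kings: int) -> float:
    """Score from the side to move's perspective: positive favors the mover."""
    return side_score(own_men_rows, own_kings) - side_score(opp_men_rows, opp_kings)


print(f"{evaluate_sketch([0, 2], 1, [1], 0):.2f}")
```

Because the score is always relative to the side to move, swapping the two sides simply flips its sign, which is exactly the property negamax relies on.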

- Attributes:

depth_limit: Maximum search depth.
time_limit: Optional time limit in seconds.
nodes: Number of nodes searched in the last call.

- Example:

```python
>>> from draughts import Board, AlphaBetaEngine
>>> board = Board()
>>> engine = AlphaBetaEngine(depth_limit=5)
>>> move = engine.get_best_move(board)
>>> board.push(move)
```

- Example with American Draughts:

```python
>>> from draughts.boards.american import Board
>>> board = Board()
>>> engine = AlphaBetaEngine(depth_limit=6)
>>> move = engine.get_best_move(board)
```

- Example with evaluation:

```python
>>> move, score = engine.get_best_move(board, with_evaluation=True)
>>> print(f"Best: {move}, Score: {score:.2f}")
```

- __init__(depth_limit: int = 6, time_limit: float | None = None, name: str | None = None)

Initialize the engine.

- Args:

- depth_limit: Maximum search depth. Higher values play stronger but search more slowly. Recommended: 5-6 for play, 7-8 for analysis.
- time_limit: Optional time limit in seconds. If set, the search uses iterative deepening and stops when time expires.
- name: Custom engine name. Defaults to the class name.

- Example:

```python
>>> engine = AlphaBetaEngine(depth_limit=6)
>>> engine = AlphaBetaEngine(depth_limit=20, time_limit=1.0)
```
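The time_limit behavior described above (search depth 1, 2, 3, ... and keep the last completed result) can be sketched as a simple loop. This is a hypothetical helper, not the engine's code: `search` stands in for a fixed-depth search, and unlike a real engine the sketch only checks the clock between iterations instead of aborting a search in progress.

```python
from __future__ import annotations

import time
from typing import Callable, TypeVar

T = TypeVar("T")


def iterative_deepening(search: Callable[[int], T], max_depth: int,
                        time_limit: float | None = None) -> tuple[T | None, int]:
    """Search depth 1, 2, ... up to max_depth, keeping the deepest completed result.

    `search` is a hypothetical callable returning, e.g., (move, score) for a depth.
    The clock is only checked between iterations.
    """
    deadline = None if time_limit is None else time.monotonic() + time_limit
    best: T | None = None
    completed = 0
    for depth in range(1, max_depth + 1):
        if deadline is not None and time.monotonic() >= deadline:
            break  # out of time: fall back to the last completed depth
        best = search(depth)
        completed = depth
    return best, completed


result, depth_reached = iterative_deepening(lambda d: f"best move at depth {d}", max_depth=5)
print(depth_reached, result)
```

With settings like depth_limit=20, time_limit=1.0, the depth limit acts only as a ceiling; in practice the clock is likely to stop the search well before depth 20.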

- evaluate(board: BaseBoard) -> float

Evaluate the board position.

Uses material count and piece-square tables. Works with any board variant.

- Args:

board: The board to evaluate.

- Returns:

Score from the perspective of the side to move. Positive values favor the current player.

- get_best_move(board: BaseBoard, with_evaluation: bool = False) -> Move | tuple[Move, float]

Find the best move for the current position.

- Args:

board: The board to analyze.
with_evaluation: If True, return a (move, score) tuple.

- Returns:

The best Move, or a (Move, float) tuple if with_evaluation=True.

- Raises:

ValueError: If no legal moves are available.

- Example:

```python
>>> move = engine.get_best_move(board)
>>> move, score = engine.get_best_move(board, with_evaluation=True)
```

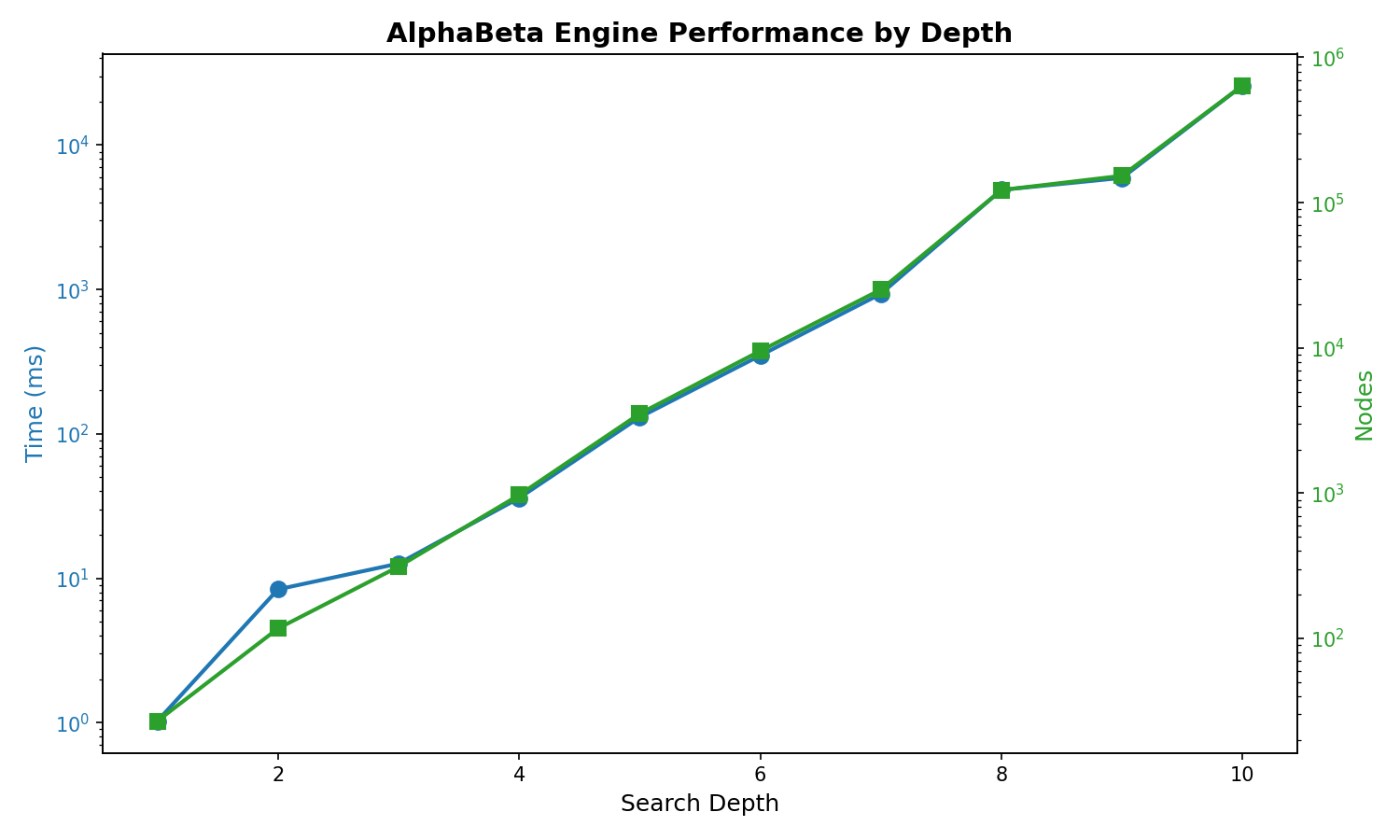

Performance

| Depth | Avg Time | Avg Nodes |
|---|---|---|
| 5 | 130 ms | 2,896 |
| 6 | 350 ms | 9,163 |
| 7 | 933 ms | 24,528 |
| 8 | 4.9 s | 122,168 |

Depth 5-6: Strong play, responsive (< 1s per move)

Depth 7-8: Very strong, suitable for analysis
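One way to read the table is through the growth factor per extra ply: how many times more nodes each additional depth costs. The sketch below only reuses the averages from the table; `growth_ratios` is a hypothetical helper.

```python
# Average nodes searched per move, copied from the table above.
AVG_NODES = {5: 2_896, 6: 9_163, 7: 24_528, 8: 122_168}


def growth_ratios(nodes_by_depth: dict[int, int]) -> dict[int, float]:
    """Nodes at depth d divided by nodes at the previous measured depth."""
    depths = sorted(nodes_by_depth)
    return {d: nodes_by_depth[d] / nodes_by_depth[prev]
            for prev, d in zip(depths, depths[1:])}


for depth, ratio in growth_ratios(AVG_NODES).items():
    print(f"depth {depth - 1} -> {depth}: x{ratio:.2f}")
```

This gives roughly x3.2, x2.7 and x5.0 for the three steps, so the step from depth 7 to 8 is noticeably more expensive than the earlier ones, matching the jump from 933 ms to 4.9 s in the timings.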

HubEngine

Use external engines implementing the Hub protocol (e.g., Scan).

- class draughts.HubEngine(path: str | Path, time_limit: float = 1.0, depth_limit: int | None = None, init_timeout: float = 10.0)

Engine wrapper for Hub protocol (used by Scan and similar engines).

This class manages subprocess communication with an external draughts engine using the Hub protocol (version 2).

- Attributes:

path: Path to the engine executable.
time_limit: Default time per move in seconds.
depth_limit: Maximum search depth (None for no limit).

- Example:

```python
>>> engine = HubEngine("scan.exe", time_limit=1.0)
>>> engine.start()
>>> move = engine.get_best_move(board)
>>> engine.quit()
>>>
>>> # Or with a context manager:
>>> with HubEngine("scan.exe") as engine:
...     move = engine.get_best_move(board)
```

- __init__(path: str | Path, time_limit: float = 1.0, depth_limit: int | None = None, init_timeout: float = 10.0)

Initialize Hub engine wrapper.

- Args:

path: Path to the engine executable.
time_limit: Time limit per move in seconds (default 1.0).
depth_limit: Maximum search depth (None for no limit).
init_timeout: Timeout for engine initialization in seconds (default 10.0).

- start() -> None

Start the engine subprocess and complete initialization handshake.

The Hub protocol initialization:

1. GUI sends "hub".
2. Engine responds with id, param declarations, then "wait".
3. GUI optionally sends set-param commands.
4. GUI sends "init".
5. Engine responds with "ready".

- Raises:

FileNotFoundError: If the engine executable is not found.
TimeoutError: If the engine doesn't respond in time.
RuntimeError: If initialization fails.
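The five handshake steps can be expressed as a small function over a line iterator, which makes them testable without a real engine process. This is a hypothetical sketch, not HubEngine's implementation: `hub_handshake` is a made-up name, the exact set-param syntax is an assumption, and timeouts (init_timeout) are left out.

```python
from __future__ import annotations

from collections.abc import Callable, Iterator


def hub_handshake(send: Callable[[str], None], lines: Iterator[str],
                  params: dict[str, str] | None = None) -> list[str]:
    """Run the five-step Hub init sequence; return the engine's pre-"wait" lines.

    `lines` must be an iterator (e.g. a subprocess's stdout) so that the second
    loop resumes where the first one stopped.
    """
    send("hub")                                   # 1. GUI sends "hub"
    declared = []
    for line in lines:                            # 2. id/param lines until "wait"
        text = line.strip()
        if text == "wait":
            break
        declared.append(text)
    else:
        raise RuntimeError('engine closed before sending "wait"')
    for name, value in (params or {}).items():    # 3. optional parameters
        send(f"set-param name={name} value={value}")  # ASSUMED command syntax
    send("init")                                  # 4. GUI sends "init"
    for line in lines:                            # 5. wait for "ready"
        if line.strip() == "ready":
            return declared
    raise RuntimeError('engine closed before sending "ready"')


sent: list[str] = []
fake_engine = iter(["id name=FakeEngine", "wait", "ready"])
print(hub_handshake(sent.append, fake_engine, {"variant": "normal"}))
print(sent)
```

Passing `send` and `lines` in, rather than a subprocess, keeps the protocol logic separate from process management; a real wrapper would connect them to the engine's stdin and stdout.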

- get_best_move(board: BaseBoard, with_evaluation: bool = False) -> Move | tuple[Move, float]

Get the best move for the given board position.

Implements the Engine interface. Sends the position to the engine, starts a search, and returns the best move.

- Args:

board: The current board state.
with_evaluation: If True, return a (move, score) tuple.

- Returns:

Move object, or (Move, score) if with_evaluation=True

- Raises:

RuntimeError: If the engine is not started or the search fails.
ValueError: If the board variant is not supported.

Example:

```python
from draughts import Board, HubEngine

with HubEngine("path/to/scan.exe", time_limit=1.0) as engine:
    board = Board()
    move, score = engine.get_best_move(board, with_evaluation=True)
```

Custom Engine

Inherit from Engine to create your own:

```python
import random

from draughts import Engine


class RandomEngine(Engine):
    def get_best_move(self, board, with_evaluation=False):
        # Pick any legal move uniformly at random and report a neutral score.
        move = random.choice(list(board.legal_moves))
        return (move, 0.0) if with_evaluation else move
```

Use with the Server for interactive testing.

Benchmarking

Compare two engines against each other with comprehensive statistics.

Quick Start

```python
from draughts import Benchmark, AlphaBetaEngine

# Compare two engines
stats = Benchmark(
    AlphaBetaEngine(depth_limit=4),
    AlphaBetaEngine(depth_limit=6),
    games=20,
).run()

print(stats)
```

Output:

```text
============================================================
BENCHMARK: AlphaBetaEngine (d=4) vs AlphaBetaEngine (d=6)
============================================================
RESULTS: 2-12-6 (W-L-D)
AlphaBetaEngine (d=4) win rate: 25.0%
Elo difference: -191

PERFORMANCE
Avg game length: 85.3 moves
AlphaBetaEngine (d=4): 25.2ms/move, 312 nodes/move
AlphaBetaEngine (d=6): 142.5ms/move, 1850 nodes/move
Total time: 45.2s
...
```
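The summary figures in this output are consistent with counting a draw as half a point, so (2 + 0.5 × 6) / 20 = 25.0%, and with the standard logistic Elo formula, which turns a 25% score into -191. The sketch below reproduces both numbers; the helper names are hypothetical, and whether Benchmark computes them exactly this way is an assumption.

```python
import math


def score_fraction(wins: int, losses: int, draws: int) -> float:
    """Fraction of available points scored, counting a draw as half a win."""
    games = wins + losses + draws
    return (wins + 0.5 * draws) / games


def elo_difference(score: float) -> float:
    """Logistic Elo model: the rating gap that predicts this expected score."""
    if not 0.0 < score < 1.0:
        raise ValueError("Elo difference is unbounded for a 0% or 100% score")
    return -400.0 * math.log10(1.0 / score - 1.0)


p = score_fraction(wins=2, losses=12, draws=6)
print(f"score {p:.1%}, Elo {elo_difference(p):+.0f}")  # prints: score 25.0%, Elo -191
```

Note that a clean sweep (0% or 100%) has no finite Elo estimate, which is one reason to play enough games for the score to land strictly between the extremes.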

Benchmark Class

- class draughts.Benchmark(engine1: Engine, engine2: Engine, board_class: type[BaseBoard] = <class 'draughts.boards.standard.Board'>, games: int = 10, openings: list[str] | None = None, swap_colors: bool = True, max_moves: int = 200, workers: int = 1)

Benchmark two engines against each other.

- Example:

```python
>>> from draughts import Benchmark, AlphaBetaEngine
>>> bench = Benchmark(AlphaBetaEngine(depth_limit=4), AlphaBetaEngine(depth_limit=6))
>>> print(bench.run())
```

- __init__(engine1: Engine, engine2: Engine, board_class: type[BaseBoard] = <class 'draughts.boards.standard.Board'>, games: int = 10, openings: list[str] | None = None, swap_colors: bool = True, max_moves: int = 200, workers: int = 1)

- run() -> BenchmarkStats

Run benchmark and return statistics.

Parameters

- engine1, engine2: Any Engine instances to compare
- board_class: Board variant (StandardBoard, AmericanBoard, etc.; default: draughts.boards.standard.Board)
- games: Number of games to play (default: 10)
- openings: List of FEN strings for starting positions (default: None)
- swap_colors: Alternate colors between games (default: True)
- max_moves: Maximum moves per game (default: 200)
- workers: Number of parallel workers (default: 1, i.e. sequential)
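How games, openings and swap_colors interact can be pictured with a small scheduling sketch. It follows one common match convention, in which each opening is played twice with colors reversed so neither engine profits from a lopsided position. This is an assumption for illustration only: `pairing_schedule` is hypothetical, and Benchmark's actual assignment of openings and colors is not documented here.

```python
def pairing_schedule(games: int, openings: list[str],
                     swap_colors: bool = True) -> list[tuple[str, str]]:
    """(opening, engine1's color) per game under a HYPOTHETICAL pairing scheme."""
    plan = []
    for i in range(games):
        if swap_colors:
            opening = openings[(i // 2) % len(openings)]  # reuse each opening twice
            color = "white" if i % 2 == 0 else "black"   # engine1 alternates colors
        else:
            opening = openings[i % len(openings)]
            color = "white"
        plan.append((opening, color))
    return plan


for game, (opening, color) in enumerate(pairing_schedule(4, ["opening-A", "opening-B"]), 1):
    print(f"game {game}: {opening}, engine1 plays {color}")
```

Under this scheme an even game count keeps the colors balanced, which is one reason to prefer even values of games when swap_colors is on.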

Custom Names

Engines with the same class name are automatically distinguished by their settings:

```python
# These will show as "AlphaBetaEngine (d=4)" and "AlphaBetaEngine (d=6)"
Benchmark(
    AlphaBetaEngine(depth_limit=4),
    AlphaBetaEngine(depth_limit=6),
)
```

Or provide custom names:

```python
Benchmark(
    AlphaBetaEngine(depth_limit=4, name="FastBot"),
    AlphaBetaEngine(depth_limit=6, name="StrongBot"),
)
```

Custom Openings

By default, 10x10 boards use built-in opening positions. Provide your own:

```python
from draughts import Benchmark, AlphaBetaEngine, STANDARD_OPENINGS

# Use specific FEN positions
custom_openings = [
    "W:W31,32,33,34,35:B1,2,3,4,5",
    "B:W40,41,42:B10,11,12",
]

stats = Benchmark(
    AlphaBetaEngine(depth_limit=4),
    AlphaBetaEngine(depth_limit=6),
    openings=custom_openings,
).run()

# Built-in openings (used by default on 10x10 boards)
print(f"Available openings: {len(STANDARD_OPENINGS)}")
```
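The openings above are FEN strings in PDN notation: the side to move, then a W section and a B section listing each color's squares. To sanity-check custom positions before a long benchmark run, a small parser can be sketched as follows. `parse_fen` is hypothetical and not part of draughts, and it assumes the usual PDN conventions of a `K` prefix for kings and `a-b` square ranges.

```python
def parse_fen(fen: str) -> tuple[str, dict[str, dict[str, set[int]]]]:
    """Split a PDN-style FEN like "W:W31,32:B1,2" into side to move and pieces."""
    turn, *sections = fen.strip().rstrip(".").split(":")
    if turn not in ("W", "B"):
        raise ValueError(f"unknown side to move: {turn!r}")
    pieces = {c: {"men": set(), "kings": set()} for c in ("W", "B")}
    for section in filter(None, sections):
        color, body = section[0], section[1:]
        if color not in pieces:
            raise ValueError(f"unknown color in section: {section!r}")
        for token in filter(None, body.split(",")):
            kind = "kings" if token.startswith("K") else "men"
            token = token.removeprefix("K")
            if "-" in token:  # square range, e.g. "31-35" (assumed PDN convention)
                first, last = map(int, token.split("-"))
                pieces[color][kind].update(range(first, last + 1))
            else:
                pieces[color][kind].add(int(token))
    return turn, pieces


turn, pieces = parse_fen("W:W31,32,33,34,35:B1,2,3,4,5")
print(turn, sorted(pieces["W"]["men"]), sorted(pieces["B"]["men"]))
```

Checking, for example, that no square appears for both colors is then a one-line set intersection.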

Different Board Variants

Test engines on any supported variant:

```python
from draughts import Benchmark, AlphaBetaEngine
from draughts import AmericanBoard, FrisianBoard, RussianBoard

# American checkers (8x8)
stats = Benchmark(
    AlphaBetaEngine(depth_limit=5),
    AlphaBetaEngine(depth_limit=7),
    board_class=AmericanBoard,
    games=10,
).run()
```

Saving Results to CSV

Save benchmark results to CSV for tracking over time:

```python
# e1, e2: any two Engine instances
stats = Benchmark(e1, e2, games=20).run()
stats.to_csv("benchmark_results.csv")
```

If the file exists, results are appended. The CSV includes:

Timestamp, engine names, game count

Wins, losses, draws, win rate, Elo difference

Average moves, time per move, nodes per move

Total benchmark time
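The append-or-create behavior can be sketched with the standard csv module. `FIELDS` and `append_result` are hypothetical, and only a subset of the columns listed above is shown; the real file's header names may differ.

```python
from __future__ import annotations

import csv
from pathlib import Path

# Hypothetical subset of the columns; the real file has more (see the list above).
FIELDS = ["timestamp", "engine1", "engine2", "games", "e1_wins", "e2_wins", "draws"]


def append_result(path: str | Path, row: dict[str, object]) -> Path:
    """Append one row, writing the header first only if the file is new or empty."""
    path = Path(path)
    write_header = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if write_header:
            writer.writeheader()
        writer.writerow(row)
    return path
```

Checking the file size as well as its existence means an empty placeholder file still gets a header, so every run adds exactly one data row below a single header line.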

Statistics

The BenchmarkStats object provides:

games: Total games played

e1_wins, e2_wins, draws: Win/loss/draw counts

e1_win_rate: Engine 1’s win rate (0.0-1.0)

elo_diff: Estimated Elo difference (positive = engine1 stronger)

avg_moves: Average game length

avg_time_e1, avg_time_e2: Average time per move

avg_nodes_e1, avg_nodes_e2: Average nodes searched per move

results: List of individual GameResult objects

- class draughts.BenchmarkStats(*, e1_name: str, e2_name: str, results: list[GameResult] = <factory>, total_time: float = 0.0)

Aggregated benchmark statistics.

- model_config = {'arbitrary_types_allowed': True}

Configuration for the model; a dictionary conforming to pydantic's ConfigDict (pydantic.config.ConfigDict).

- to_csv(path: str | Path = 'benchmark_results.csv') -> Path

Save benchmark results to CSV file.

If the file exists, results are appended. Otherwise, a new file is created with headers.

- Args:

path: Path to CSV file (default: "benchmark_results.csv")

- Returns:

Path to the saved CSV file.

- Example:

```python
>>> stats = Benchmark(e1, e2, games=10).run()
>>> stats.to_csv("results.csv")
```

- class draughts.GameResult(*, game_number: int, winner: Color | None = None, moves: int = 0, e1_time: float = 0.0, e2_time: float = 0.0, e1_nodes: int = 0, e2_nodes: int = 0, e1_color: Color = Color.WHITE, opening: str = '', final_fen: str = '', termination: str = 'unknown')

Result of a single game.

- model_config = {'arbitrary_types_allowed': True}

Configuration for the model; a dictionary conforming to pydantic's ConfigDict (pydantic.config.ConfigDict).